Proxies for egoism

A rough collection of thoughts relating to egoism and altruism

Until I was ~16, I believed that altruism did not exist and that everything anybody does is done for purely egoistic reasons. As far as I can remember, I grew confident of this after hearing an important childhood mentor talk about it. I had also read about the ideas of Max Stirner and had a vague understanding of his notion of egoism.

I can't remember exactly what made me change my mind, but pondering thought experiments similar to this one from Nate Soares played a significant role:

> Imagine you live alone in the woods, having forsaken civilization when the Unethical Psychologist Authoritarians came to power a few years back.
>
> Your only companion is your dog, twelve years old, who you raised from a puppy. (If you have a pet or have ever had a pet, use them instead.)
>
> You're aware of the fact that humans have figured out how to do some pretty impressive perception modification (which is part of what allowed the Unethical Psychologist Authoritarians to come to power).
>
> One day, a psychologist comes to you and offers you a deal. They'd like to take your dog out back and shoot it. If you let them do so, they'll clean things up, erase your memories of this conversation, and then alter your perceptions such that you perceive exactly what you would have if they hadn't shot your dog. (Don't worry; they'll also have people track you and alter the perceptions of anyone else who would see the dog, so that they also see the dog, so that you won't seem crazy. And they'll remove that fact from your mind, so you don't worry about being tracked.)
>
> In return, they'll give you a dollar.

I noticed that taking the dollar and getting the dog killed would be the self-interested and egoistically rational choice, but I cared about the dog and didn't want him to die!

Furthermore, psychological egoism bugs me because its proponents often need to come up with overly complicated explanations for seemingly altruistic behaviours. Applying Occam's razor, I concluded that the simplest explanation for certain behaviours is often that they are "just altruistic."

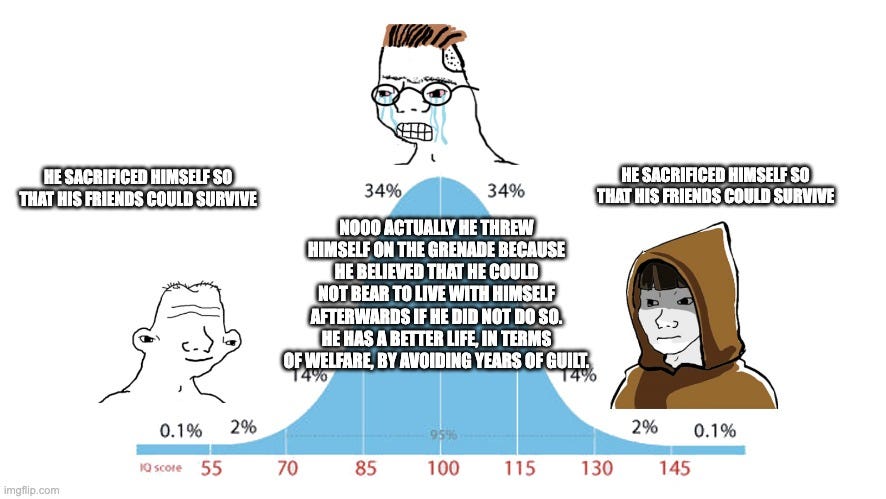

For example, say a soldier throws himself on a grenade to prevent his friends from being killed. Psychological egoists need to come up with some convoluted reason explaining why he did this out of pure self-interest:

Even though I have come to believe that “real” altruistic behaviour exists, I still think that pure altruism is rare and that I take the vast majority of my actions for egoistic reasons. The reasoning behind this often boils down to somewhat cynical explanations à la The Elephant in the Brain. For example, I engage a lot with effective altruism, but my motivation to do so can also largely be explained by egoistic motives.

While pondering this whole issue of egoism, I wondered whether I could come up with a way to measure how egoistic I am. Two potential proxies came to mind:

Proxy 1: Two buttons

Consider the following thought experiment:

You are given the option of pressing one of two buttons. Press button A and you die instantly. Press button B and a random person in the world dies instantly. You have to press one of them. Which one do you choose?

Let's take it up a notch:

Imagine that when you press button B, instead of one random person dying, two random people die. We can increase this to any positive integer n. How large would n, the number of people who die when you press button B, have to be before you would press button A instead?

Now consider a different version:

You are given a gun and the choice to take one of two actions. You can either shoot yourself in the head or shoot a random person in the head. That person comes from somewhere in the world and is suddenly teleported to stand in front of you. You have to take one of those two actions. Which one do you choose?

Let's take it up a notch again:

You are given a gun and the choice to take one of two actions. You can either shoot yourself in the head or shoot n random people in the head, each from somewhere in the world and suddenly teleported to stand in front of you. How large would n, the number of people you would need to shoot, have to be before you would prefer to shoot yourself?

In a consequentialist framework, the button version and the gun version result in the same outcome. Notice, though, that they feel very different, at least to me.

Proxy 2: Hedonium

Imagine being offered the opportunity to spend the rest of your life in hedonium. By hedonium, I mean being in a state of perfect bliss for every second of the rest of your life. Related thought experiments and concepts include the experience machine, orgasmium, and wireheading. Let's say we take the valence of the best moment of your life, increase its pleasure by a few orders of magnitude, and enable you to stay in that state of boundless joy until you cease to exist in ~100 years.

The only downside is that while all this pleasure is filling your mind, your body is floating around in a tank of nutrient fluid or something. You can’t take any more actions in the world. You can’t impact it in any way. Opting into hedonium could therefore be considered egoistic because while you are experiencing pleasure beyond words, you wouldn’t be able to help other people live better lives.

How likely would you be to accept the offer? Consider how tempting it feels: is your gut reaction to accept or decline it outright, or does it seem more complicated?

I hypothesise that giving a larger n in the first proxy and a higher probability of opting into hedonium in the second roughly correlate with being more egoistic. I am deliberately not sharing my own reactions to these thought experiments so as not to bias anyone, but I invite readers to leave their responses in the comments.